2.7 KiB

Read-only benchmark

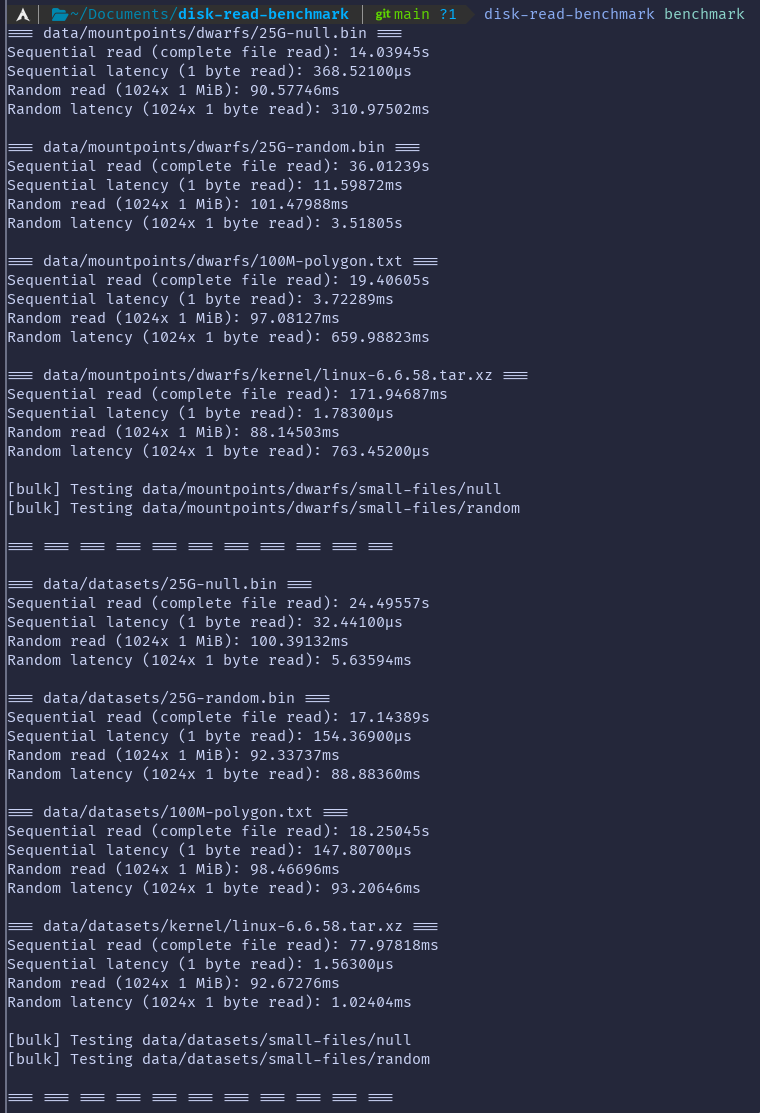

This tests the latency, sequential read, and random read speeds of a variety of data.

Installation

To install this, run the following:

git clone https://git.askiiart.net/askiiart/disk-read-benchmark

cd ./disk-read-benchmark/

cargo update

cargo install --path .

Make sure to generate and add the completions for your shell:

- bash:

disk-read-benchmark generate-bash-completions | source - zsh:

disk-read-benchmark generate-zsh-completions | source - fish:

disk-read-benchmark generate-fish-completions | source

(note that this only lasts until the shell is closed)

Running

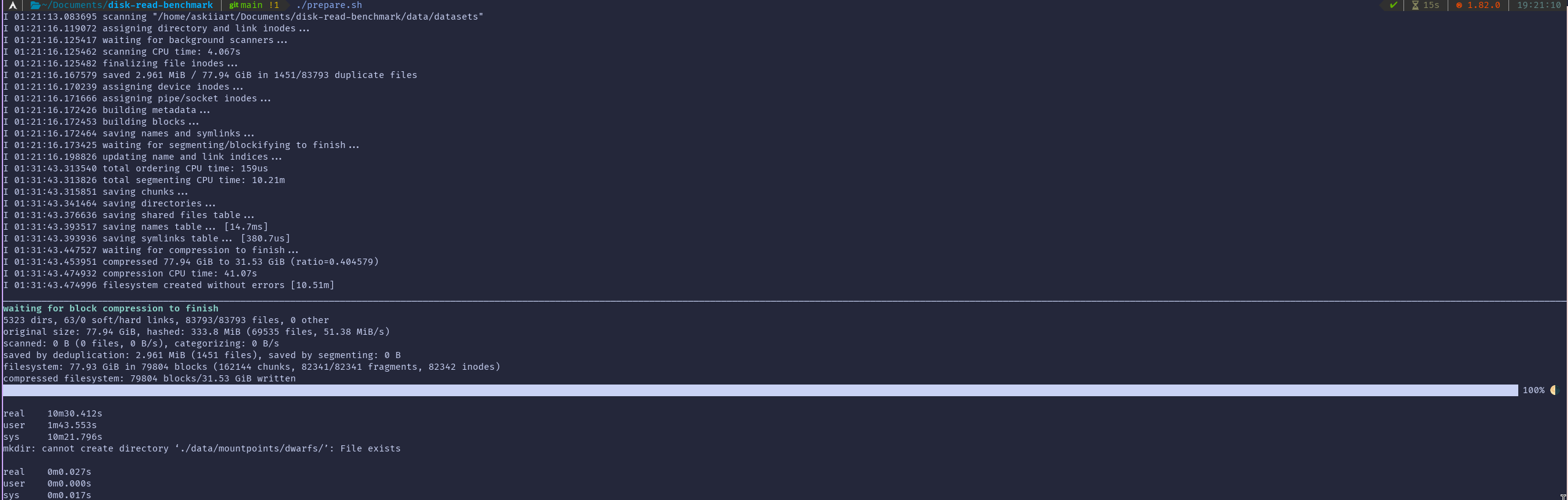

The program will automatically generate all data used, except for the regular polygon data. Once the data is generated, stop the program with Ctrl+C, then run prepare.sh to archive and mount the data using DwarFS, tar, and fuse-archive.

It will output its data at ./data/benchmark-data.csv and ./data/bulk.csv in these formats:

benchmark-data.csv:

filesystem dir,file path,sequential read time,sequential read latency,random read time,random read latency

bulk.csv:

filesystem dir,folder path,test type,time1,time2,time3,[...]

Arguments

Usage: disk-read-benchmark <COMMAND>

Commands:

generate-bash-completions Generate bash completions

generate-zsh-completions Generate zsh completions

generate-fish-completions Generate fish completions

grab-data Grabs the datasets used for benchmarking

benchmark Runs the benchmark

prep-dirs Prepares the directories so other programs can prepare their datasets

run Runs it all

help Print this message or the help of the given subcommand(s)

Options:

-h, --help Print help

-V, --version Print version

Data used

- 25 GiB random file

- 25 GiB empty file

- 1024 1 KiB random files

- 1024 1 KiB empty files

- Linux kernel source (compressed and non-compressed)

- 100 million-sided regular polygon, generated by the

headless-deterministicbranch of confused_ace_noises/maths-demos

Usage

You can put the data in folders in ./data/mountpoints/, which can be on different filesystems (including stuff like DwarFS archives mounted with FUSE). Running grab-data and prep-dirs will create all the necessary files it can, at which point you just add the polygon data, put the stuff to be benchmarked in the mountpoints dir, then run it with the benchmark argument.